import cv2

import mediapipe as mp

mp_holistic = mp.solutions.holistic

mp_drawing = mp.solutions.drawing_utils

mp_drawing_styles = mp.solutions.drawing_styles

mp_hands = mp.solutions.hands

IMAGE_FILES = []

###################################################################################

# # motion_hands

# with mp_hands.Hands(

# static_image_mode=True,

# max_num_hands=2,

# min_detection_confidence=0.5) as hands:

#

# for idx, file in enumerate(IMAGE_FILES):

# # Read an image, flip it around y-axis for correct handedness output (see

# # above).

# image = cv2.flip(cv2.imread(file), 1)

# # Convert the BGR image to RGB before processing.

# #results1 = hands.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

#

# # Print handedness and draw hand landmarks on the image.

# # print('Handedness:', results1.multi_handedness)

#

# if not results1.multi_hand_landmarks:

# continue

# #image_height, image_width, _ = image.shape

# #annotated_image = image.copy()

#

# for hand_landmarks in results1.multi_hand_landmarks:

# print('hand_landmarks:', hand_landmarks)

# print(

# f'Index finger tip coordinates: (',

# f'{hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP].x * image_width}, '

# f'{hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP].y * image_height})'

# )

# mp_drawing.draw_landmarks(

# annotated_image,

# hand_landmarks,

# mp_hands.HAND_CONNECTIONS,

# mp_drawing_styles.get_default_hand_landmarks_style(),

# mp_drawing_styles.get_default_hand_connections_style())

#

# cv2.imwrite(

# '/tmp/annotated_image' + str(idx) + '.png', cv2.flip(annotated_image, 1))

# # Draw hand world landmarks.

# if not results1.multi_hand_world_landmarks:

# continue

# for hand_world_landmarks in results1.multi_hand_world_landmarks:

# mp_drawing.plot_landmarks(

# hand_world_landmarks, mp_hands.HAND_CONNECTIONS, azimuth=5)

#############################################################################

# For static images:

with mp_holistic.Holistic(

static_image_mode=True,

model_complexity=2,

enable_segmentation=True,

refine_face_landmarks=True) as holistic:

hands = mp_hands.Hands(

model_complexity=0,

min_detection_confidence=0.5,

min_tracking_confidence=0.5)

for idx, file in enumerate(IMAGE_FILES):

image = cv2.imread(file)

image_height, image_width, _ = image.shape

# Convert the BGR image to RGB before processing.

results = holistic.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

######################### hands image setting ###################################

results1 = hands.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

print('Handedness:', results1.multi_handedness)

# if not results1.multi_hand_landmarks:

# continue

#

# for hand_landmarks in results1.multi_hand_landmarks:

# print('hand_landmarks:', hand_landmarks)

# print(

# f'Index finger tip coordinates: (',

# f'{hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP].x * image_width}, '

# f'{hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP].y * image_height})'

# )

# mp_drawing.draw_landmarks(

# annotated_image,

# hand_landmarks,

# mp_hands.HAND_CONNECTIONS,

# mp_drawing_styles.get_default_hand_landmarks_style(),

# mp_drawing_styles.get_default_hand_connections_style())

#

# # Draw hand world landmarks.

# if not results1.multi_hand_world_landmarks:

# continue

# for hand_world_landmarks in results1.multi_hand_world_landmarks:

# mp_drawing.plot_landmarks(

# hand_world_landmarks, mp_hands.HAND_CONNECTIONS, azimuth=5)

######################### hands image setting ###################################

if results.pose_landmarks:

print(

f'Nose coordinates: ('

f'{results.pose_landmarks.landmark[mp_holistic.PoseLandmark.NOSE].x * image_width}, '

f'{results.pose_landmarks.landmark[mp_holistic.PoseLandmark.NOSE].y * image_height})'

)

annotated_image = image.copy()

# Draw segmentation on the image.

# To improve segmentation around boundaries, consider applying a joint

# bilateral filter to "results.segmentation_mask" with "image".

condition = mp.stack((results.segmentation_mask,) * 3, axis=-1) > 0.1

bg_image =mp.zeros(image.shape, dtype=mp.uint8)

bg_image[:] = BG_COLOR

annotated_image = mp.where(condition, annotated_image, bg_image)

# Draw pose, left and right hands, and face landmarks on the image.

mp_drawing.draw_landmarks(

annotated_image,

results.face_landmarks,

mp_holistic.FACEMESH_TESSELATION,

landmark_drawing_spec=None,

connection_drawing_spec=mp_drawing_styles

.get_default_face_mesh_tesselation_style())

mp_drawing.draw_landmarks(

annotated_image,

results.pose_landmarks,

mp_holistic.POSE_CONNECTIONS,

landmark_drawing_spec=mp_drawing_styles.

get_default_pose_landmarks_style())

cv2.imwrite('/tmp/annotated_image' + str(idx) + '.png', annotated_image)

# Plot pose world landmarks.

mp_drawing.plot_landmarks(

results.pose_world_landmarks, mp_holistic.POSE_CONNECTIONS)

# For webcam input:

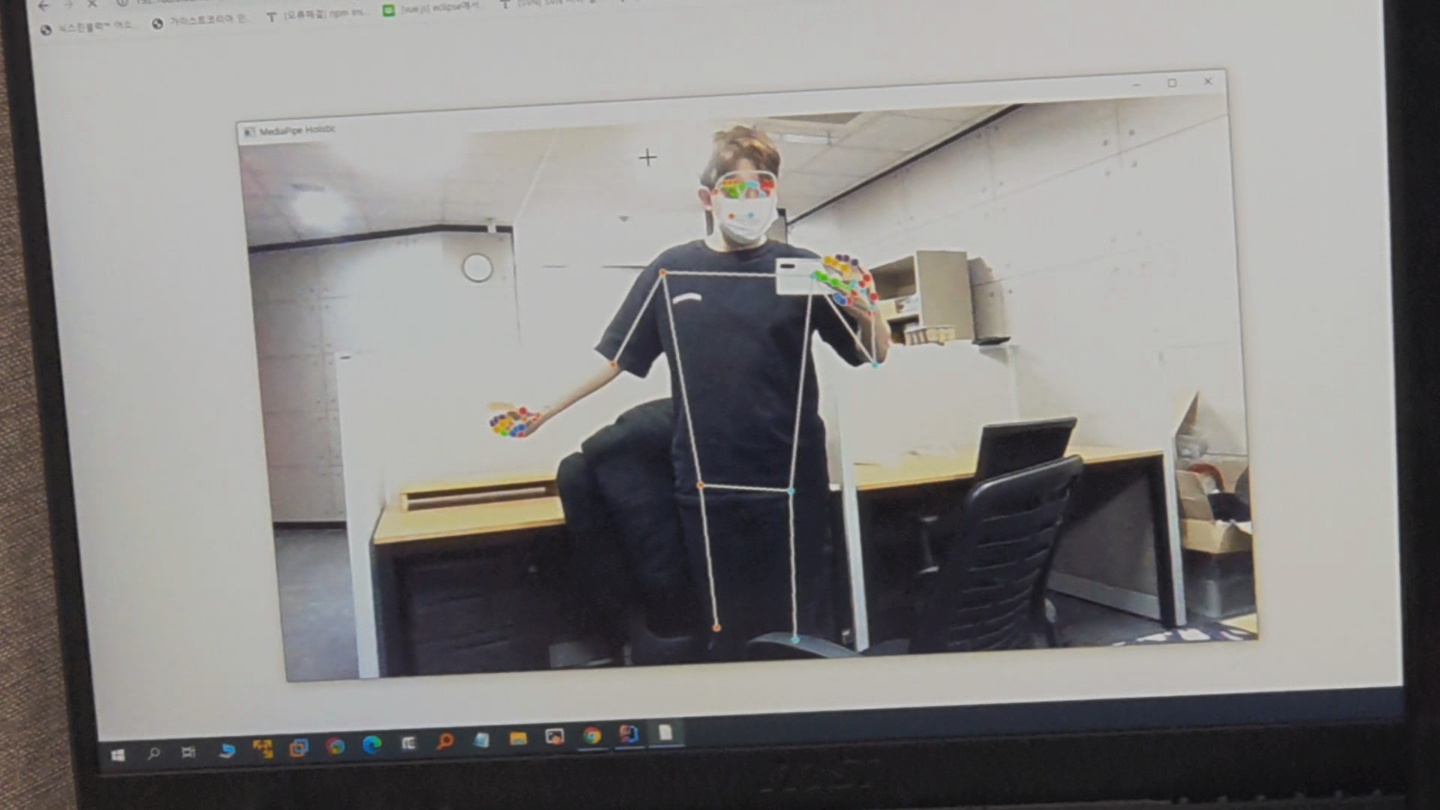

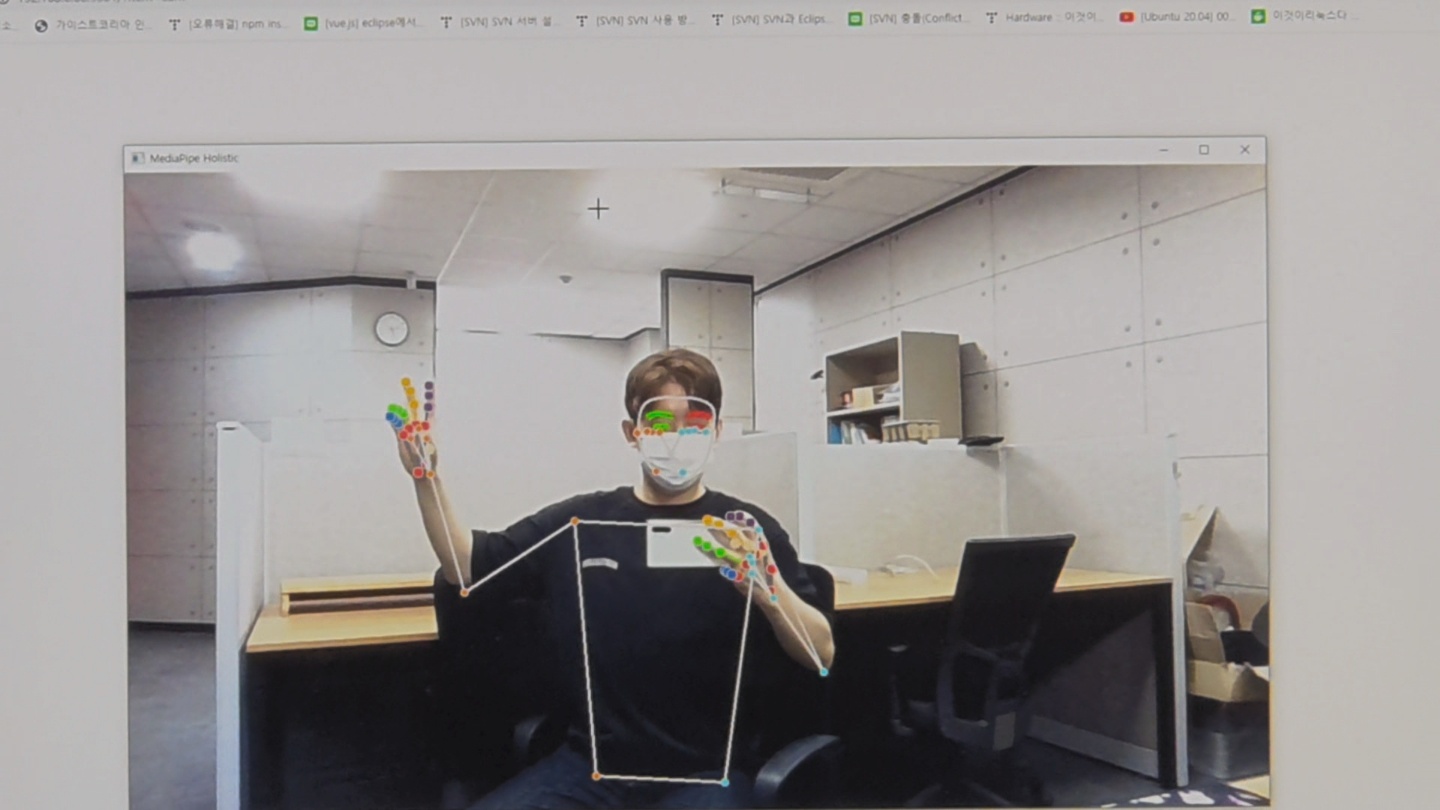

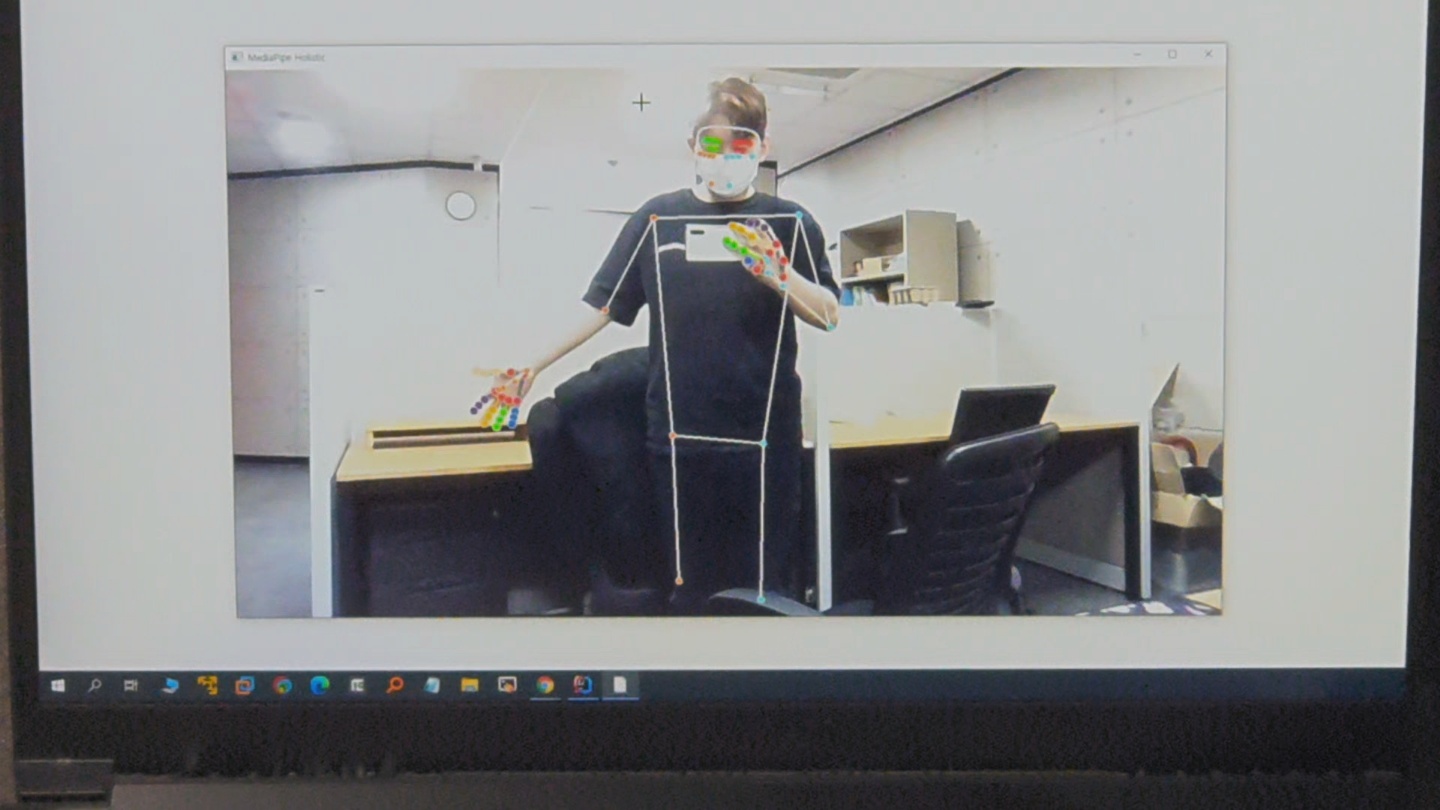

cap = cv2.VideoCapture(0)

#webcam window size

cap.set(cv2.CAP_PROP_FRAME_WIDTH, 3200)

cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 1800)

with mp_holistic.Holistic(

min_detection_confidence=0.5,

min_tracking_confidence=0.5) as holistic:

hands = mp_hands.Hands(

model_complexity=0,

min_detection_confidence=0.5,

min_tracking_confidence=0.5)

while cap.isOpened():

success, image = cap.read()

if not success:

print("Ignoring empty camera frame.")

# If loading a video, use 'break' instead of 'continue'.

continue

# To improve performance, optionally mark the image as not writeable to

# pass by reference.

image.flags.writeable = False

imageRGB = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

results = holistic.process(imageRGB)

results1 = hands.process(imageRGB)

print(results.pose_landmarks)

# Draw the hand annotations on the image.

image.flags.writeable = True

imageRGB = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

### hands start ######

# draw hands

if results1.multi_hand_landmarks:

for hand_landmarks in results1.multi_hand_landmarks:

mp_drawing.draw_landmarks(

image,

hand_landmarks,

mp_hands.HAND_CONNECTIONS,

mp_drawing_styles.get_default_hand_landmarks_style(),

mp_drawing_styles.get_default_hand_connections_style()

)

#### hands end ####

mp_drawing.draw_landmarks(

image,

results.face_landmarks,

mp_holistic.FACEMESH_CONTOURS,

landmark_drawing_spec=None,

connection_drawing_spec=mp_drawing_styles

.get_default_face_mesh_contours_style())

mp_drawing.draw_landmarks(

image,

results.pose_landmarks,

mp_holistic.POSE_CONNECTIONS,

landmark_drawing_spec=mp_drawing_styles

.get_default_pose_landmarks_style())

# Flip the image horizontally for a selfie-view display.

cv2.imshow('MediaPipe Holistic', cv2.flip(image, 1))

if cv2.waitKey(5) & 0xFF == 27:

break

cap.release()>> pose_estimation.py

# This is a _very simple_ example of a web service that recognizes faces in uploaded images.

# Upload an image file and it will check if the image contains a picture of Barack Obama.

# The result is returned as json. For example:

#

# $ curl -XPOST -F "file=@obama2.jpg" http://127.0.0.1:5001

#

# Returns:

#

# {

# "face_found_in_image": true,

# "is_picture_of_obama": true

# }

#

# This example is based on the Flask file upload example: http://flask.pocoo.org/docs/0.12/patterns/fileuploads/

# NOTE: This example requires flask to be installed! You can install it with pip:

# $ pip3 install flask

import face_recognition

from flask import Flask, jsonify, request, redirect

# You can change this to any folder on your system

ALLOWED_EXTENSIONS = {'png', 'jpg', 'jpeg', 'gif'}

app = Flask(__name__)

def return_func():

return '''

<!doctype html>

<title>Is this a picture of Obama?</title>

<h1>Upload a picture and see if it's a picture of Obama!</h1>

<form method="POST" enctype="multipart/form-data" action="/">

<input type="file" name="file">

<input type="submit" value="Upload">

<br>

<hr>

<br>

</form>

<input type="button" value="모션 디렉터" onclick="location.href='/?item=cam'">

'''

# motion tracking module run

def motion_webcam():

print ("motion_webcam is active")

return_func()

exec(open("pose_estimation.py").read())

def allowed_file(filename):

return '.' in filename and \

filename.rsplit('.', 1)[1].lower() in ALLOWED_EXTENSIONS

@app.route('/', methods=['GET', 'POST'])

def upload_image():

print( request.args.get('item') == 'cam')

# motion director request filter

if request.args.get('item') == 'cam':

print('cam connection ! : ')

motion_webcam()

# Check if a valid image file was uploaded

if request.method == 'POST':

if 'file' not in request.files:

return redirect(request.url)

file = request.files['file']

if file.filename == '':

return redirect(request.url)

if file and allowed_file(file.filename):

# The image file seems valid! Detect faces and return the result.

return detect_faces_in_image(file)

# If no valid image file was uploaded, show the file upload form:

return return_func()

def detect_faces_in_image(file_stream):

# Pre-calculated face encoding of Obama generated with face_recognition.face_encodings(img)

known_face_encoding = [-0.09634063, 0.12095481, -0.00436332, -0.07643753, 0.0080383,

0.01902981, -0.07184699, -0.09383309, 0.18518871, -0.09588896,

0.23951106, 0.0986533 , -0.22114635, -0.1363683 , 0.04405268,

0.11574756, -0.19899382, -0.09597053, -0.11969153, -0.12277931,

0.03416885, -0.00267565, 0.09203379, 0.04713435, -0.12731361,

-0.35371891, -0.0503444 , -0.17841317, -0.00310897, -0.09844551,

-0.06910533, -0.00503746, -0.18466514, -0.09851682, 0.02903969,

-0.02174894, 0.02261871, 0.0032102 , 0.20312519, 0.02999607,

-0.11646006, 0.09432904, 0.02774341, 0.22102901, 0.26725179,

0.06896867, -0.00490024, -0.09441824, 0.11115381, -0.22592428,

0.06230862, 0.16559327, 0.06232892, 0.03458837, 0.09459756,

-0.18777156, 0.00654241, 0.08582542, -0.13578284, 0.0150229 ,

0.00670836, -0.08195844, -0.04346499, 0.03347827, 0.20310158,

0.09987706, -0.12370517, -0.06683611, 0.12704916, -0.02160804,

0.00984683, 0.00766284, -0.18980607, -0.19641446, -0.22800779,

0.09010898, 0.39178532, 0.18818057, -0.20875394, 0.03097027,

-0.21300618, 0.02532415, 0.07938635, 0.01000703, -0.07719778,

-0.12651891, -0.04318593, 0.06219772, 0.09163868, 0.05039065,

-0.04922386, 0.21839413, -0.02394437, 0.06173781, 0.0292527 ,

0.06160797, -0.15553983, -0.02440624, -0.17509389, -0.0630486 ,

0.01428208, -0.03637431, 0.03971229, 0.13983178, -0.23006812,

0.04999552, 0.0108454 , -0.03970895, 0.02501768, 0.08157793,

-0.03224047, -0.04502571, 0.0556995 , -0.24374914, 0.25514284,

0.24795187, 0.04060191, 0.17597422, 0.07966681, 0.01920104,

-0.01194376, -0.02300822, -0.17204897, -0.0596558 , 0.05307484,

0.07417042, 0.07126575, 0.00209804]

# Load the uploaded image file

img = face_recognition.load_image_file(file_stream)

# Get face encodings for any faces in the uploaded image

unknown_face_encodings = face_recognition.face_encodings(img)

face_found = False

is_obama = False

if len(unknown_face_encodings) > 0:

face_found = True

# See if the first face in the uploaded image matches the known face of Obama

match_results = face_recognition.compare_faces([known_face_encoding], unknown_face_encodings[0])

if match_results[0]:

is_obama = True

# Return the result as json

result = {

"face_found_in_image": face_found,

"is_picture_of_obama": is_obama

}

return jsonify(result)

if __name__ == "__main__":

app.run(host='0.0.0.0', port=5001, debug=True)

>> web_service_example.py

위 두 소스를 추가 .

해당 경로 web_service_example.py 실행 .

============================================================================

OS환경 :

Windows 10 Pro /

Intel(R) Core(TM) i7-10750H CPU @ 2.60GHz 2.59 GHz /

RAM 16.0GB /

GeForce GTX 1650 Ti

개발환경 :

tensorflow 2.7.0/

opencv 4.5.5 /

python 3.7 /

cuda 10.1 /

cudnn 7.6.5 /

anaconda 4.11.0(파이썬 가상환경)/

visual studio c++ 2017/ CMAKE

API >>

face_recognition / dlib

mediapipe / picamera / flask / pickle

[환경변수]

1. anaconad

D:\ssss\company work\projects\dev_env\anaconda\Library\bin

D:\ssss\company work\projects\dev_env\anaconda\Scripts

D:\ssss\company work\projects\dev_env\anaconda

2. python

D:\ssss\company work\projects\dev_env\pyhon3.10.2

D:\ssss\company work\projects\dev_env\pyhon3.10.2\Scripts

D:\ssss\company work\projects\dev_env\anaconda\Library\mingw-w64\bin

3. CUDA

D:\ssss\company work\projects\dev_env\anaconda\Lib\site-packages\cmake\data\share\cmake-3.22\Modules\FindCUDA

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\bin

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\include

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\lib

4. CUDNN

C:\Users\NSJ\Downloads\cudnn-10.0-windows10-x64-v7.6.5.32\cuda

C:\Users\NSJ\Downloads\cudnn-10.0-windows10-x64-v7.6.5.32\cuda\bin

C:\Users\NSJ\Downloads\cudnn-10.0-windows10-x64-v7.6.5.32\cuda\include

5. CMAKE

C:\Program Files (x86)\Microsoft Visual Studio\2017\Community\Common7\IDE\CommonExtensions\Microsoft\CMake\CMake

C:\Program Files (x86)\Microsoft Visual Studio\2017\Community\Common7\IDE\CommonExtensions\Microsoft\CMake\CMake\bin

ref )

https://github.com/ageitgey/face_recognition --> import

https://google.github.io/mediapipe/solutions/hands#python-solution-api

'잡동사니&공부' 카테고리의 다른 글

| OOP & FP (0) | 2022.11.08 |

|---|---|

| 이클립스 설정 (자바) (0) | 2022.10.04 |

| [MSA] 비동기 BACKING SERVICE (0) | 2022.08.29 |

| Ubuntu18.04 구축 (AI PROJECT: 텐서플로우) (0) | 2022.03.16 |

| LGPL - 3.0 라이선스 관련 ( feat. BBB ) (0) | 2021.12.01 |